From Abstract Logic to Applied Science: Charting the Historical Trajectory of Artificial Intelligence

The history of artificial intelligence (AI) is not a linear march of progress but a turbulent narrative of theoretical leaps, computational achievements, and sobering periods of disillusionment. It is a story that begins with a philosophical question, “Can machines think?”, and culminates in a global technological force that is actively reshaping science, industry, and society. Tracing this evolution from its conceptual origins with Alan Turing to the generative models of the present day reveals a series of critical paradigm shifts: from symbolic reasoning to statistical learning, and now from analysis to creation. This journey, marked by both intellectual ambition and pragmatic recalibration, provides the essential context for understanding the capabilities and trajectory of modern AI.

The Foundational Epoch: From Turing’s Test to Dartmouth’s Conjecture

The intellectual bedrock of artificial intelligence was laid not in a laboratory but in the abstract realms of mathematics and philosophy. In his seminal 1950 paper, “Computing Machinery and Intelligence,” British mathematician Alan Turing reframed the ambiguous question of machine thought. [1][2] Instead of engaging in endless philosophical debate, Turing proposed an operational benchmark: the “Imitation Game,” now widely known as the Turing Test. [1][3] The test holds that if a machine can converse in text with a human interrogator and cannot be reliably distinguished from a human participant, it can be said to exhibit intelligent behavior. [4][5] This was a pivotal move, shifting the focus from defining “thinking” to evaluating performance. Turing further argued that a digital computer, being a universal machine, could theoretically imitate any other discrete-state machine, making it the ideal candidate for achieving this goal. [1][5] He even presciently suggested that the most effective path forward was not to program a machine with adult knowledge, but to create a “child machine” capable of learning from experience. [3][5] This theoretical framework established the core challenge of AI.

Six years later, in the summer of 1956, this abstract idea was given a name and a formal mission. The Dartmouth Summer Research Project on Artificial Intelligence, organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, convened the field’s founding minds. [6][7] The workshop’s proposal was built on a bold conjecture: “that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.” [6][7] This event officially christened “artificial intelligence” and launched it as a formal scientific discipline, transforming Turing’s philosophical inquiry into a tangible research agenda. [8][9]

The Cycles of Hype and Winter: From Symbolic Logic to Expert Systems

The decades following the Dartmouth Workshop were characterized by a cyclical pattern of immense optimism followed by sharp corrections, known as “AI winters.” [10][11] The initial “AI summer” saw remarkable, albeit limited, successes based on symbolic reasoning: the idea that intelligence could be replicated by manipulating symbols according to logical rules. Programs like the Logic Theorist demonstrated automated mathematical proofs, and early chatbots like ELIZA simulated human conversation through pattern matching. [12][13] The Shakey the Robot project at Stanford Research Institute integrated perception, reasoning, and action, producing the first general-purpose mobile robot able to reason about its own actions. [14][15] These successes fueled hype and significant government funding, particularly from agencies like DARPA. However, researchers vastly underestimated the complexity of real-world problems, especially the challenge of common-sense reasoning and the combinatorial explosion of possibilities in tasks like language translation. [16] When promised breakthroughs failed to materialize, funding was drastically cut in the mid-1970s, initiating the first AI winter. [11][16]

From this period of disillusionment, a more pragmatic approach emerged: expert systems. These systems set aside the grand goal of general intelligence for the focused task of encoding the knowledge of human experts in specific domains, such as medical diagnosis or equipment configuration. [16][17] The commercial success of systems like XCON, which reportedly saved Digital Equipment Corporation $40 million annually, sparked a second AI boom in the 1980s. [16] Yet this too proved unsustainable. Expert systems were brittle and expensive to build and maintain, and the specialized Lisp machine hardware they ran on was soon outpaced by cheaper, more powerful desktop computers, leading to the second AI winter in the late 1980s and early 1990s. [17][18]
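
To make the rule-based approach behind expert systems concrete, the following is a minimal sketch of forward chaining, in which if-then rules fire repeatedly until no new conclusions can be derived. The rules and fact names are hypothetical, invented purely for illustration; they are not XCON’s actual rule base, and real systems held thousands of rules.

```python
def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known.
            if conclusion not in known and set(conditions) <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical rules for a toy equipment-configuration domain.
RULES = [
    (("needs_graphics", "has_free_slot"), "add_graphics_card"),
    (("add_graphics_card",), "needs_larger_power_supply"),
    (("needs_larger_power_supply", "small_chassis"), "flag_for_review"),
]

derived = forward_chain({"needs_graphics", "has_free_slot"}, RULES)
print(sorted(derived))
```

The sketch also hints at the brittleness described above: the third rule never fires because nothing asserts `small_chassis`, and any situation the rule authors did not anticipate simply produces no conclusion.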

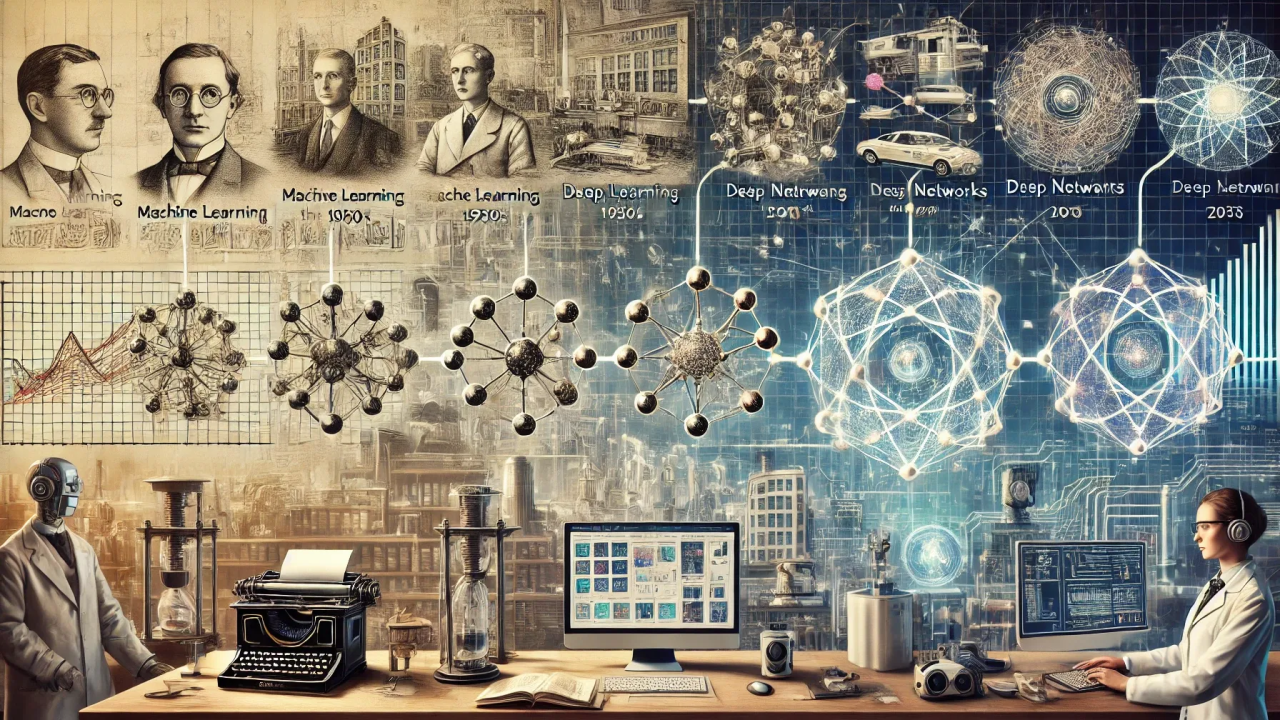

The Data-Driven Renaissance: The Ascendancy of Machine Learning

The end of the second AI winter was not driven by a breakthrough in symbolic logic but by a fundamental paradigm shift toward statistical methods and machine learning. This renaissance was fueled by two converging technological trends: the exponential growth of computational power described by Moore’s Law and the explosion of digital data generated by the internet. Instead of painstakingly hand-crafting rules, AI systems could now learn patterns directly from vast datasets. The growing power of raw computation was symbolized by the 1997 chess match between world champion Garry Kasparov and IBM’s Deep Blue supercomputer. [19][20] Deep Blue did not “think” like a human; it won through brute-force search, evaluating up to 200 million positions per second. [21] Its victory was a landmark event, demonstrating that computation at scale could dominate a domain once considered the pinnacle of human intellect. [20][21] IBM’s Watson extended the principle to data when it triumphed on the quiz show Jeopardy! in 2011. [15] Unlike Deep Blue, Watson had to handle the nuances and ambiguities of natural language, analyzing massive volumes of unstructured text to answer questions with remarkable accuracy. These milestones were not about creating consciousness but about demonstrating the power of massive computational resources, increasingly applied to vast datasets, and they set the stage for the next great leap in AI’s evolution. The quiet work on neural networks, particularly by pioneers like Geoffrey Hinton, which had been ongoing since the 1970s, finally found the computational power and data it needed to flourish, leading directly to the deep learning revolution. [14]
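
The brute-force search attributed to Deep Blue above can be illustrated with a minimal minimax sketch over a toy game tree. This shows only the core idea of exhaustively evaluating positions; it is not Deep Blue’s actual algorithm, which added pruning, chess-specific evaluation, and specialized hardware. The tree and its leaf scores are made-up values.

```python
def minimax(node, maximizing):
    """Return the best achievable score from this node by exhaustive search."""
    if isinstance(node, (int, float)):
        return node  # leaf: a static evaluation of the position
    # Players alternate turns, so each level flips between max and min.
    child_scores = [minimax(child, not maximizing) for child in node]
    return max(child_scores) if maximizing else min(child_scores)


# Hypothetical game tree: nested lists are positions, numbers are leaf evaluations.
tree = [[3, 5], [2, 9], [0, 7]]
best = minimax(tree, maximizing=True)
print(best)
```

Here the opponent minimizes within each branch (yielding 3, 2, and 0), and the player to move picks the branch with the largest of those guaranteed outcomes. The number of positions grows exponentially with search depth, which is why evaluating hundreds of millions of positions per second mattered.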

The Generative Age: From Understanding Content to Creating It

The current era of AI is defined by the rise of generative models, a direct consequence of the deep learning revolution and a pivotal architectural innovation. In 2017, researchers at Google published the paper “Attention Is All You Need,” which introduced the Transformer architecture. [22][23] This model dispensed with the sequential processing of earlier recurrent architectures such as RNNs and LSTMs, instead using a “self-attention” mechanism that could weigh the importance of all parts of the input simultaneously. [24][25] This allowed for massive parallelization in training and a far more sophisticated grasp of context, proving to be the key that unlocked the potential of Large Language Models (LLMs). OpenAI’s GPT series, including models like GPT-3, demonstrated an astonishing ability to generate coherent, contextually relevant, and often creative text, for many language tasks coming close to the indistinguishability that the Turing Test describes. [14] The impact of this generative shift extends far beyond chatbots. It marks AI’s transition from an analytical tool to a creative partner and a catalyst for scientific discovery. The most profound example is DeepMind’s AlphaFold, an AI system widely credited with solving the 50-year-old grand challenge of protein structure prediction. [26][27] By accurately predicting the 3D shapes of proteins from their amino acid sequences, AlphaFold has transformed biology and drug discovery, a feat recognized when its key architects, Demis Hassabis and John Jumper, shared the 2024 Nobel Prize in Chemistry. [26][28] This achievement signifies that AI is no longer merely simulating human intelligence but is now augmenting it, solving fundamental problems that were previously intractable and accelerating the pace of scientific progress itself.
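
To make the self-attention mechanism at the heart of the Transformer more concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is a simplification under stated assumptions: the toy token vectors are made-up numbers, and the same vectors serve as queries, keys, and values, whereas the actual architecture derives each from learned projections and runs several attention heads side by side.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: each position attends to every position."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy input: three token vectors of dimension 2 (made-up numbers).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Simplifying assumption: the tokens act as their own queries, keys, and values.
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1 and records how strongly one token attends to every other token. The loop over queries is written sequentially for readability; because no row depends on another, practical implementations compute all rows at once as matrix multiplications, which is the source of the parallelism noted above.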